DEW1. Digital Image Basics

Chapter 1

Of our five senses, sight provides near-instantaneous information over a large range of distances and space. So it is no coincidence that people use a plethora of images to convey a rich variety of data: photographs, maps, drawings, paintings, movies, text documents, signs, etc.

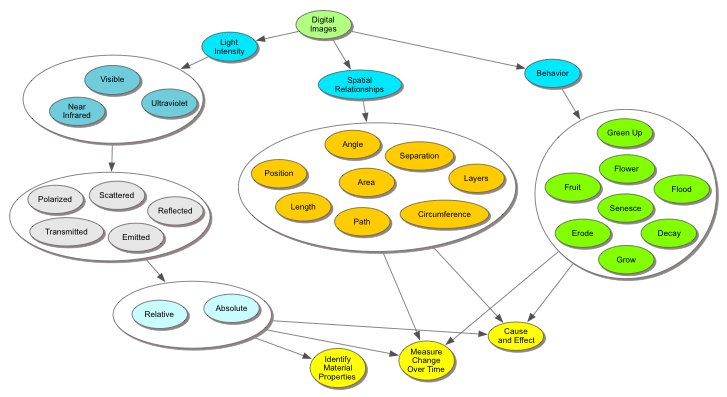

With the inventions of the computer and the Internet, images are now more than memories. Each contains millions of color intensity measurements organized spatially allowing us to measure the location, motion, orientation, shape, texture, size, chemical and physical properties of objects in the images. Image analysis software provides ready access to these data. There are nearly unlimited uses of digital image data. Every aspect of industry uses digital images creatively. The caption in the image to the right has examples of types of data that can be found in a digital image.

I. What Is Light?

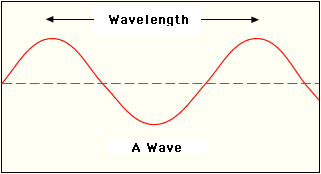

Another name for light is electromagnetic (EM) radiation, which is a stream of photons emanating from a surface. Photons are packets of energy that have characteristics of both waves (oscillating electric and magnetic fields) and particles (the photons). Each photon has a specific amount of energy that is related to the wavelength of the EM waves. The longer the wavelength, the lower the photon’s energy.

DEW1.1. Investigation:

How Is Light Created?

The movie below, created by NASA, shows five ways photons of light are emitted (given off) by atoms and molecules through interactions with electrons in those atoms and molecules.

NASA video about five ways that light is created, created by the Cassini-Huygens Satellite Mission to Saturn outreach team at NASA’s Jet Propulsion Laboratory (JPL).

1.1 What are the five ways of producing photons of light?

Of the five ways of producing light photons, only two generate visible light: electron shell change and blackbody radiation. Since objects need to be at several thousand degrees to emit visible light from blackbody radiation, most of the light we see day to day is light given off when electrons change from a higher to a lower orbital energy state.

II. What Is Color?

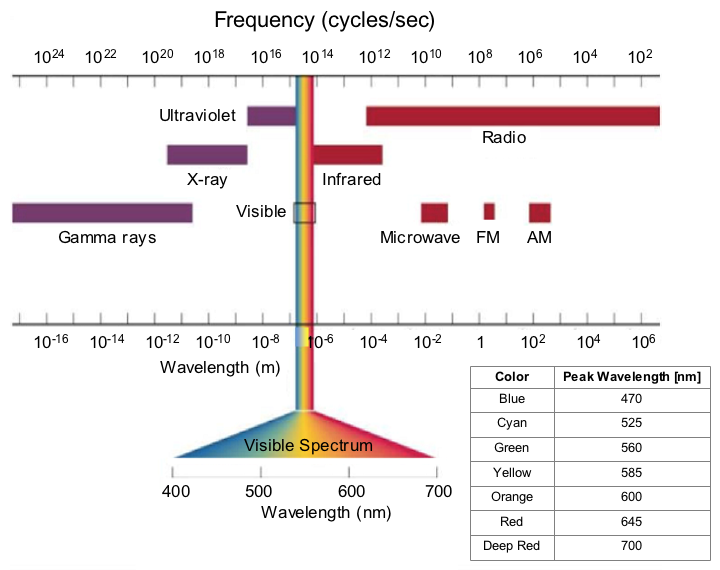

The Electromagnetic (EM) Spectrum

All the colors in the rainbow that humans see… red, orange, yellow, green, blue, violet …are photons in a relatively narrow band of energies of EM radiation.

Photons of violet light have more energy than photons of green light and all other colors. Red photons have the least energy of all the visible light. In terms of waves, red has the longest wavelength and violet the shortest.
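The link between color and photon energy can be checked with a short calculation. The sketch below uses the standard relation E = hc/λ; the three wavelengths are approximate values assumed here for illustration and do not come from the text.

```python
# Physical constants (SI units).
PLANCK_J_S = 6.62607015e-34       # Planck constant, joule-seconds
LIGHT_SPEED_M_S = 299_792_458.0   # speed of light in vacuum, meters per second
JOULES_PER_EV = 1.602176634e-19   # one electronvolt expressed in joules


def photon_energy_ev(wavelength_nm):
    """Return the energy of one photon in electronvolts for a wavelength in nanometers."""
    wavelength_m = wavelength_nm * 1e-9
    return PLANCK_J_S * LIGHT_SPEED_M_S / wavelength_m / JOULES_PER_EV


# Assumed, approximate wavelengths for three colors.
for name, nm in [("red", 700), ("green", 530), ("violet", 400)]:
    print(f"{name:>6}: {nm} nm -> {photon_energy_ev(nm):.2f} eV")
```

The shorter the wavelength, the larger the energy printed, which matches the ordering described above.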

Because different atoms only emit or absorb specific energies of photons, color helps us determine the chemical composition of objects: the types of atoms and how they are bonded together in objects determine the colors of light that are absorbed or emitted.

In the reverse of emitting photons, photons of light can be absorbed by electrons. In that process, the electrons move to a higher energy state farther from the atom’s nucleus. Photons are absorbed only if they have the exact energy (the exact color) to move an electron to the higher energy position.

Photons of light striking an object have three possible fates:

- Photons are absorbed by the object

- Photons are reflected from the object

- Photons are transmitted right through the object.

Visible light that isn’t absorbed by an object does not cause the electrons to change their energy state, but the photons can still interact with the electrons and be re-emitted as the same color in the form of reflected and/or transmitted light. Light that isn’t absorbed or reflected is transmitted through the object.

The light that reflects from a surface is greatly influenced by surface irregularities. Most surfaces in our everyday world are quite rough when viewed microscopically, and these surfaces produce diffuse reflection, meaning you can’t see an image in the reflection (unlike a mirror or smooth metallic surface). Most objects we see and photograph have diffuse reflection of light from their surfaces. Adding up the intensity of visible light that is absorbed, reflected, and transmitted will equal the intensity of the light falling on the object. In other words, the percentages of light reflected, transmitted, and absorbed add up to 100%. Keeping this in mind while analyzing images allows one to assess material properties of the photographed objects (the leaves below are an example).
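Because the three shares must add up to 100%, measuring any two gives the third. Here is a minimal sketch of that bookkeeping; the function name and the leaf percentages are made up for illustration and are not measurements.

```python
def absorbed_percent(reflected_percent, transmitted_percent):
    """Return the percent of incoming light absorbed, given the other two shares."""
    absorbed = 100.0 - reflected_percent - transmitted_percent
    if reflected_percent < 0 or transmitted_percent < 0 or absorbed < 0:
        raise ValueError("shares must be non-negative and sum to at most 100%")
    return absorbed


# Hypothetical leaf in red light: 8% reflected, 5% transmitted.
print(absorbed_percent(reflected_percent=8.0, transmitted_percent=5.0))
```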

DEW1.2. Investigation:

LEDs As Light Sensors

Light emitting diodes (LEDs) were made to convert electricity into light, but they can also work in reverse: convert light into electricity. Use LEDs to measure and compare the intensity of red light and infrared light.

III. Paint-on-paper Images vs. Digital Images

When you first learned to paint, at some point you most likely mixed all of your paints together. The resulting color? Something dark: a dark gray, brown, or even black (it depends on the type and variety of paint colors you had). Our early experiences with color mixing were blending together paints, where yellow and blue make green and the three colors stirred together make colors ranging from brown to gray to black. From this we pick up two errors in our understanding of color. First, that primary colors can be mixed together to create all other colors. Second, that red, yellow, and blue are the primary colors. But did you ever try to make black out of your red, yellow, and blue? Even more difficult: try to make fluorescent pink, silver, or gold. Primary colors cannot make all other colors, but they can make the most colors from the fewest starting resources.

Later in life we learn that when light from the Sun passes through a prism, we see a rainbow of colors and conversely, the rainbow of colors of light makes white light even though a rainbow of paints made black. We may also learn that for colors of light, there are three primary colors which can be mixed together to create all of the colors of the rainbow.

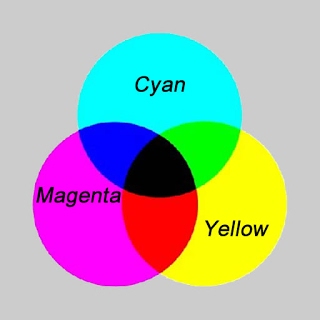

Because colors are produced differently by pigments (or paints) and beams of colored light, different sets of primary colors are used to make the rainbow of colors. In paints, it was red, yellow, and blue, but this has been updated to yellow, magenta, and cyan. This switch became important when color images were printed onto paper.

Primary colors of pigments:

yellow, magenta, and cyan.

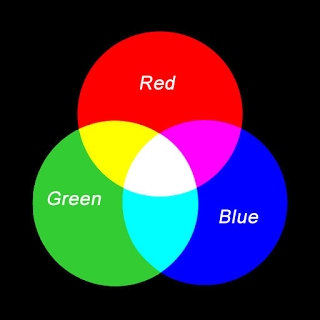

In digital images, the primary colors are red, green, and blue (RGB). The big difference between pigment-on-paper-based images and digital images is that pigments in paints subtract light reflecting from their surfaces, while in digital images, different colors of light are added together by your eye and brain to create new color.

Primary colors of light:

red, green and blue.

Notice that mixing any two of the primary colors of pigments produces a primary color of light (look at the intersections of two circles of color in these diagrams). The reverse is true also: mixing any two of the primary colors of light produces a primary color of pigments. To thoroughly understand the data in digital images, you must be familiar with how colors are created using red, green, and blue light in varying intensities. If you aren’t, try out the investigations below.
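The claim about mixing two primary colors of light can be tried out in a few lines. This is a sketch only: it assumes each color is a (red, green, blue) triple on a 0 to 255 intensity scale, and it models mixing as simple channel-by-channel addition.

```python
def mix_light(*colors):
    """Add beams of light channel by channel, clipping each channel at 255."""
    return tuple(min(255, sum(channel)) for channel in zip(*colors))


# Primary colors of light and of pigments as (red, green, blue) intensities.
RED, GREEN, BLUE = (255, 0, 0), (0, 255, 0), (0, 0, 255)
YELLOW, MAGENTA, CYAN = (255, 255, 0), (255, 0, 255), (0, 255, 255)

print(mix_light(RED, GREEN) == YELLOW)
print(mix_light(RED, BLUE) == MAGENTA)
print(mix_light(GREEN, BLUE) == CYAN)
print(mix_light(RED, GREEN, BLUE))  # all three primaries together
```

Each pair of light primaries lands on one of the pigment primaries, and all three together give full intensity in every channel, which is white.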

In the printing process for the color pages of a newspaper or a color printer, the rainbow of colors is created from four colors of ink: cyan, magenta, yellow, and black. Cyan, magenta, and yellow are very specific shades of blue, red, and yellow and together can create the maximum number of colors. When mixed together in equal parts, the three create black or gray. The black ink is added as a fourth color to use less ink in making darker shades of color.

To see how this works, take a microscope or magnifying glass to a color picture in the morning paper or a magazine.
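The role of the black ink can also be sketched in code. The conversion below is the naive textbook formula, not what any particular printer does (real printers rely on color profiles); the function names are hypothetical, and RGB values are assumed to run from 0 to 255.

```python
def rgb_to_cmyk(red, green, blue):
    """Convert 0-255 RGB values to 0.0-1.0 CMYK ink fractions (naive formula)."""
    r, g, b = red / 255, green / 255, blue / 255
    black = 1 - max(r, g, b)   # darkness shared by all three channels
    if black == 1:             # pure black needs no colored ink
        return (0.0, 0.0, 0.0, 1.0)
    cyan = (1 - r - black) / (1 - black)
    magenta = (1 - g - black) / (1 - black)
    yellow = (1 - b - black) / (1 - black)
    return (cyan, magenta, yellow, black)


def ink_without_black(red, green, blue):
    """Total ink fraction if only cyan, magenta, and yellow were available."""
    return sum(1 - value / 255 for value in (red, green, blue))


dark_gray = (64, 64, 64)
print("CMY only :", round(ink_without_black(*dark_gray), 3))
print("with K   :", round(sum(rgb_to_cmyk(*dark_gray)), 3))
```

For a dark gray, the four-ink version uses roughly a third of the ink that equal parts of cyan, magenta, and yellow would need.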

DEW1.3. Investigation:

Newsprint and Video Displays

With a microscope or magnifying glass examine a color picture in the morning paper or a magazine. Look for the overlapping dots of various sizes and transparency in four colors of ink: cyan, magenta, yellow, and black.

With a magnifying glass, examine your computer monitor or television. Look for the regular pattern of red, green, and blue lines or dots.

1.2 How do the printed dots on paper compare with what you see on the computer monitor or TV? Do you see a series of overlapping dots of various sizes? What are the colors of the dots?

You may even see a newspaper once in a while where the color layers were not properly lined up so the images appear to be double and the picture colors are not right.

DEW1.4. Investigation: Creating Colors

Guess colors produced by the combination of red, green, and blue light intensity values. Explore how colors are made using the ColorBasics software program.

IV. Naming Colors

People have given many names to the colors they see. When Isaac Newton wrote down the colors he saw in the rainbow, he chose to break them out into seven names. We still use that list of names today, although you may find it difficult to pick out the color indigo or the color violet somewhere in the room.

There are a number of basic color names that people refer to: red, orange, yellow, green, blue, purple, brown, white, and black. But individual people may not agree on what to call a specific block of color. Is it red, orange-red, salmon, burnt-sienna, or watermelon? Naming or distinguishing between colors is a very subjective process. As you study light and color throughout this course, you may find that what you think is pure red has more blue in it than the computer’s pure red. Don’t let that confuse you; when it comes to studying color it is not the name of the color that matters most.

Fun fact: Despite what you learned in paint, when you mix yellow and blue light, the result is white. Likewise, magenta + green makes white; and cyan + red makes white.
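Each pair in the fun fact is a color plus the color that supplies whatever it lacks. A small sketch, assuming colors are (red, green, blue) triples on a 0 to 255 scale and that beams add channel by channel:

```python
def complement(color):
    """Return the color that supplies whatever each channel is missing."""
    return tuple(255 - channel for channel in color)


def add_beams(first, second):
    """Add two beams of light channel by channel, clipping at 255."""
    return tuple(min(255, a + b) for a, b in zip(first, second))


WHITE = (255, 255, 255)
pairs = {"yellow": (255, 255, 0), "magenta": (255, 0, 255), "cyan": (0, 255, 255)}

for name, color in pairs.items():
    partner = complement(color)
    print(name, "+", partner, "->", add_beams(color, partner) == WHITE)
```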

DEW1.5. Investigation:

Dueling Light Beams

Make and mix your own beams of colored light to see what happens.

V. Computer Displays

Color televisions and computer monitors use the three primary colors of light to display thousands or millions of different colors. If you take a magnifying glass to your computer monitor or television, you will see a regular pattern of red, green, and blue lines or dots. Each of these glows at varying intensities, just as a color printer drops varying amounts of ink. In both cases, what you perceive is the mixing of the primary colors, producing up to 16.8 million different colors on the screen.

A number of different technologies have been invented for computer displays or monitors. Long ago the cathode ray tube (CRT) was most common. Then the liquid crystal display (LCD) became common especially in laptop computers. You may expect technology companies to keep coming up with better and better display systems, but it’s likely they’ll still be making a screen glow in the three colors: red, green, and blue (RGB).

VI. Pixels

You see color on things around you because light shines into your eye, is received by the cones and rods of your retina, and is converted to electrochemical signals that are then processed through your brain.

A traditional film-based camera records an image onto photographic film made of transparent plastic coated on one side with a gelatin emulsion with tiny light-sensitive silver halide crystals embedded in it. The sharpness and fineness of detail a film photograph can have depend on the sizes of the crystals. The smaller the crystals, the finer the detail that can be captured on that film. The fineness of detail in a photograph is called its resolution.

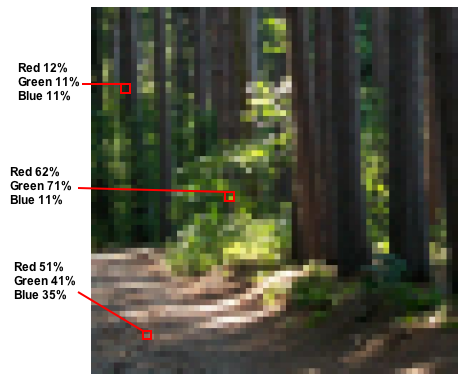

A digital camera detects color because light shines on sensors in the camera which are sensitive to red, green, and blue. The number of sensors in the camera will define the highest sharpness (resolution) possible for that camera. The term pixel is a truncation of the phrase “picture element,” the smallest block of color in a digital picture. The term is also used for the smallest block of color on your computer display.

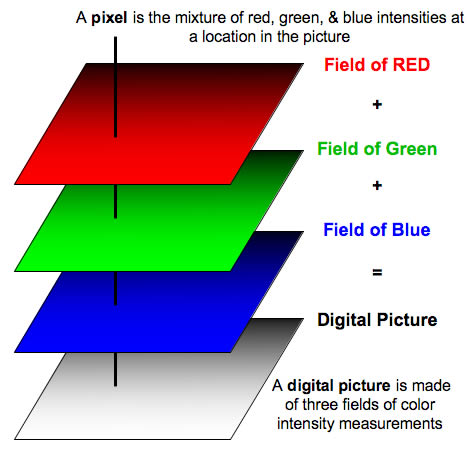

The digital camera records the red, green, and blue intensities for each pixel into a numerical file – the values of color and position of the pixel are defined with numbers. To display the image on the computer screen, the computer takes the red intensity value for a particular pixel from the file and shines the red component of the pixel at that amount at that place on the computer screen. It does the same thing for the other two primary colors (green and blue) for every pixel in the image.

(larger pixels and fewer of them)

The resolution of an image refers to the number of pixels used to display an image. A higher resolution image uses more pixels and allows for more detail to be seen in the picture. Scanners and printers will often advertise their resolution in dots per inch (dpi), which is the number of pixels per inch that they are capable of recording or depositing. A document printed at low resolution (fewer dpi) has jagged steps of dots that make up a curve like the letter “O”. From a high resolution printer (more dpi), that same letter looks like a smooth circle.
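To get a feel for what dpi means in pixels, multiply the size in inches by the dots per inch. The page size and dpi values below are examples chosen for illustration, not figures from the text.

```python
def pixel_dimensions(width_in, height_in, dpi):
    """Return (columns, rows) of dots for a page of the given size in inches."""
    return round(width_in * dpi), round(height_in * dpi)


# Hypothetical example: a letter-size page at a low and a high resolution.
for dpi in (72, 300):
    columns, rows = pixel_dimensions(8.5, 11, dpi)
    print(f"{dpi:>3} dpi: {columns} x {rows} = {columns * rows:,} dots")
```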

The first image of the rubber duck above has much higher resolution than this image of trees in a forest, as you can see by comparing their “blockiness.” Pixel sizes in the forest image are much larger than the pixels in the duck image. In the forest image, the percent intensity of each color in each pixel is based on a scale with 0% representing no light of that color to 100% representing the maximum intensity of color that may be measured with the camera. In computers, where a binary scale is used with 2^8 (or 256) levels for each color, 0% is recorded as 0 and 100% is recorded as 255. These images can display over 16.8 million different colors.
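The numbers in this paragraph follow from simple arithmetic, sketched here. The function name is hypothetical, and rounding to the nearest level is an assumption about how a percentage would be stored.

```python
LEVELS = 2 ** 8  # 256 intensity levels per color, stored as 0..255


def percent_to_level(percent):
    """Map a 0-100% intensity onto the 0-255 scale (nearest level, assumed)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(percent / 100 * (LEVELS - 1))


print(percent_to_level(0), percent_to_level(100))
print(f"{LEVELS ** 3:,} possible colors")  # 256 choices each for red, green, blue
```

Three colors with 256 levels each give 256 × 256 × 256 combinations, which is where the figure of about 16.8 million comes from.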

For more on how digital cameras work, see digital camera information at HowStuffWorks.com

There are two general ways to consider how color is represented in the image. The first is that each pixel has three primary color intensities associated with it (red, green, and blue), and the pixel is at a specific location in the image. The combined three intensities are most likely determined by the chemical and/or physical properties of the object at this location in the image.

The second way to consider how colors are organized in a digital image is that there are three separate layers of color intensities, red, green, and blue, each organized in a two dimensional field. It may be helpful to think about each of these layers as being produced by being in a dark room where only that color light is shining.
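The two views hold the same numbers arranged differently. A minimal sketch using plain Python lists, where the tiny 2×2 image is invented for illustration:

```python
# View 1: a grid of pixels, each holding (red, green, blue) intensities.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 0)],
]


def split_layers(pixels):
    """View 2: return three grids, one per color, with the same shape as the image."""
    return tuple(
        [[pixel[channel] for pixel in row] for row in pixels]
        for channel in range(3)
    )


def merge_layers(red, green, blue):
    """Rebuild the grid of (red, green, blue) pixels from the three layers."""
    return [
        list(zip(red_row, green_row, blue_row))
        for red_row, green_row, blue_row in zip(red, green, blue)
    ]


red, green, blue = split_layers(image)
print("red layer:  ", red)
print("green layer:", green)
print("blue layer: ", blue)
print("round trip ok:", merge_layers(red, green, blue) == image)
```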

These five images are of the same scene but with different size sensors, which create different size pixels.

Each image has twice as many pixels high and wide as the one before it. Notice how the amount of detail changes with smaller and smaller pixels. You aren’t zooming into the image, just seeing more detail with smaller pixels.

In a sense, it is helpful to think about pixels as uniform color tiles that are often found in bathrooms and kitchens. When organized, they can be used to make pictures.

Below is an example of a hand that looks remarkably different in different lighting conditions. We all look younger in red light, and as the wavelengths get shorter, more detail of the damage caused by the sun and aging is evident.

Left: Hand in blue light.

Right: Hand in red light.

It is easy to forget that a pixel is actually an area that has physical meaning relative to the objects in the digital image. Since the color is uniform across the pixel, we can’t see detail smaller than the size of the pixel. This doesn’t apply just to digital images: our eyes also have limited resolution. For example, we can’t see the stomata in a leaf or the hairs on the legs of a fly without a magnifying glass. At some point, we cannot see detail smaller than the sensors (rods and cones) in our eyes.
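One way to see why detail smaller than a pixel is lost is to merge small pixels into larger ones by averaging. The sketch below does this for a grayscale grid. Block averaging is an assumption made here for illustration, and a real sensor or software package may combine light differently.

```python
def downsample(gray, block):
    """Average non-overlapping block x block squares of a grayscale grid."""
    rows, cols = len(gray), len(gray[0])
    if rows % block or cols % block:
        raise ValueError("grid size must be a multiple of the block size")
    result = []
    for top in range(0, rows, block):
        result_row = []
        for left in range(0, cols, block):
            values = [
                gray[r][c]
                for r in range(top, top + block)
                for c in range(left, left + block)
            ]
            result_row.append(sum(values) // len(values))  # one uniform value per big pixel
        result.append(result_row)
    return result


# A thin bright line, one small pixel wide, on a dark background.
fine = [
    [0, 255, 0, 0],
    [0, 255, 0, 0],
    [0, 255, 0, 0],
    [0, 255, 0, 0],
]
print(downsample(fine, 2))
```

With pixels twice as large, the sharp line becomes a column of mid-gray blocks: its brightness is still counted, but its exact position and width can no longer be seen.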

Televisions and computer displays have settings for the number of pixels they display. For example, 1024×768 pixels means that your monitor shows a rectangle made of blocks of color (pixels) arranged in 1,024 columns and 768 rows, for a total of 786,432 blocks of color. This is less than one megapixel (one million pixels). For a digital camera, this would be a very low resolution on today’s market.
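The pixel count above is just columns times rows, as this short check shows (the function names are hypothetical):

```python
def pixel_count(columns, rows):
    """Total number of pixels in a display or image."""
    return columns * rows


def megapixels(columns, rows):
    """The same count expressed in millions of pixels."""
    return pixel_count(columns, rows) / 1_000_000


print(f"{pixel_count(1024, 768):,} pixels = {megapixels(1024, 768):.2f} megapixels")
```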

Electronic sensors detect photons of light and create a voltage that represents the intensity of light hitting the sensor. Although our eyes aren’t electronic, they act similarly – rather than voltage, the light sensors in the eye create chemicals that tell the brain how much light is being seen. See an animation on the basics of light detection for more detail, read about the basics of digital cameras, or see the digital camera information at HowStuffWorks.com.

A number of different image file types are used to compress the data so the image computer file takes up a minimal amount of computer memory.

DEW1.6. Investigation:

Resolution and Pixel Color

Use the DigitalImageBasics software to increase and decrease the resolution of the image and see how many pixels are necessary to recognize the picture’s subject. Also examine how the pixel color values change at different resolutions.

The movie below was created for Digital Earth Watch by Marion Tomusiak, Museum of Science, Boston:

The intensity of light measured by a camera depends on three factors:

- Does the object emit visible light? Objects that emit visible light are usually very hot–the sun, light bulbs, etc.

- If the object is not emitting light, then what is measured depends on the quality of light shining on the object. For example, at sunset, there is very little blue light remaining in the sunlight, so clouds become red and orange (depends on how much green light has been removed by atmospheric scattering).

- Position of the camera with respect to the source of light. If the light is shining through an object, the camera is measuring the colors that pass through (are transmitted through) the object. Otherwise, the camera is measuring the proportions of light reflected off the surface of the object.

VII. Digital Cameras

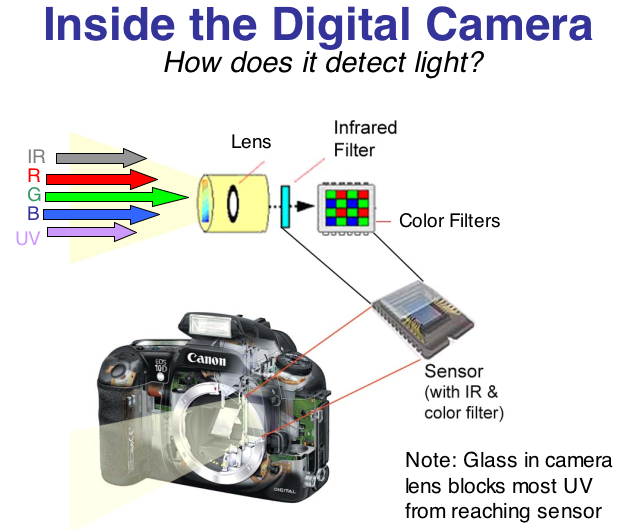

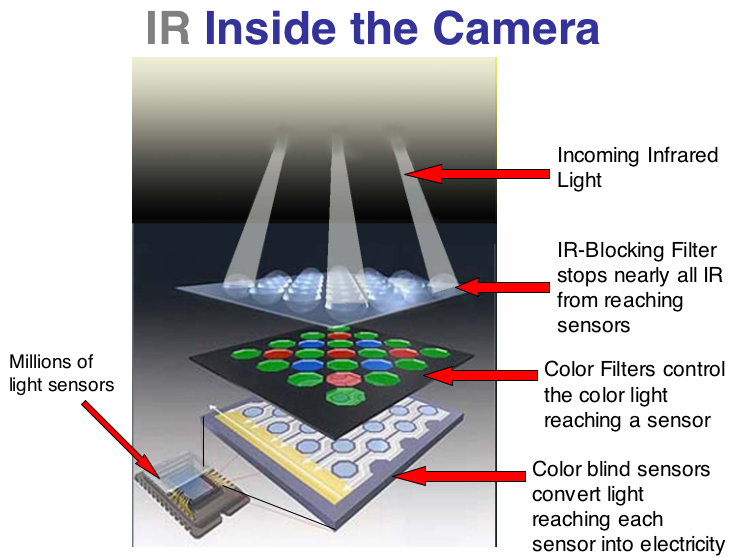

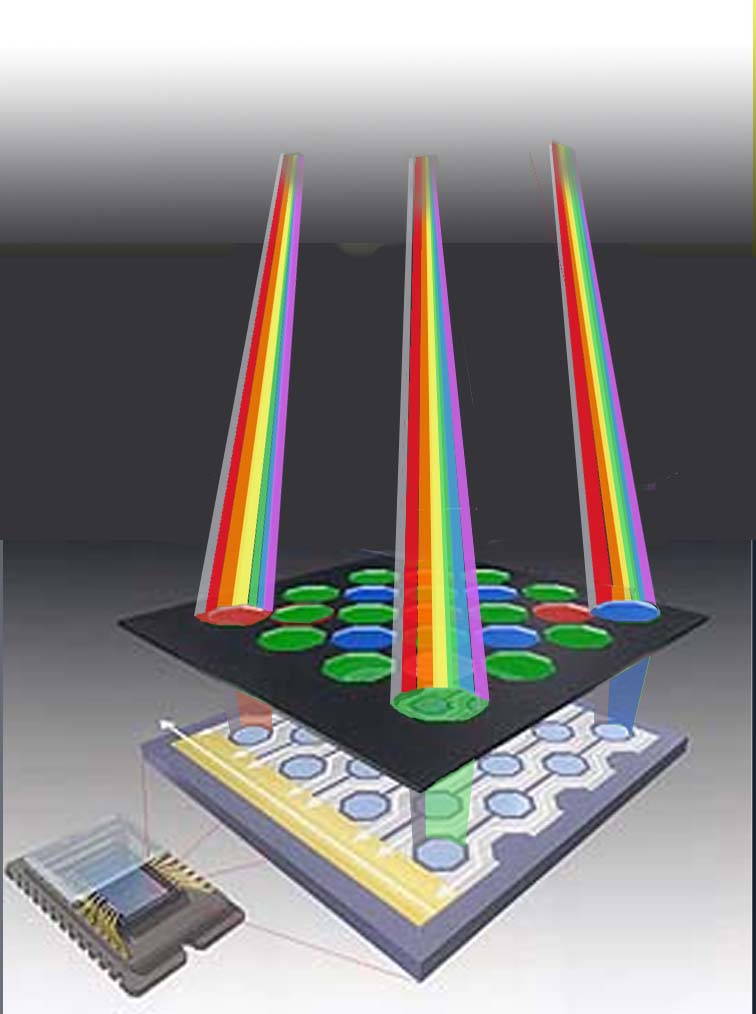

A wide range of light that a digital camera may detect enters the camera through the lens: all colors of visible light, near infrared (IR), and ultraviolet (UV).

Since most digital cameras have a glass lens, and glass is nearly opaque to UV, most UV is absorbed before it enters the camera.

What happens to the near infrared light entering the camera?

Near infrared doesn’t make it to the camera sensor because it is blocked by the infrared-blocking filter, which lets the visible light through.

The visible light reaching the sensor is controlled by small color filters as shown in the diagram below. The red filter lets red light through, so only this light is hitting the small sensor underneath it. Similarly, the green and blue filters control the wavelengths of light reaching the sensors beneath them.

it passes through color filters.
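The effect of the color filters can be sketched as follows. The text does not say how the filters are laid out, so the repeating 2×2 tile below (red, green / green, blue, a common Bayer-style arrangement) is an assumption, as are the function names and the sample scene.

```python
# Assumed repeating 2x2 tile of filters; the source does not specify the layout.
FILTER_TILE = (("R", "G"), ("G", "B"))
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def filter_color(row, col):
    """Return which color filter sits over the sensor at (row, col)."""
    return FILTER_TILE[row % 2][col % 2]


def sensor_readings(scene):
    """Each sensor records only the color its filter lets through."""
    return [
        [pixel[CHANNEL_INDEX[filter_color(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(scene)
    ]


# Hypothetical scene: uniform yellow light (full red and green, no blue).
yellow_scene = [[(255, 255, 0)] * 2 for _ in range(2)]
print(sensor_readings(yellow_scene))
```

The sensors under red and green filters read full intensity, while the one under the blue filter reads zero, because yellow light contains no blue.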

VIII. Seeing Only One Color of Light

When we talk about seeing only one color we are not referring to the condition known as color blindness. People who are colorblind have difficulty distinguishing between certain colors, for example red and green, but they are still capable of perceiving light of both colors. What we are talking about is if you could only see the small range of light wavelengths that is called “red”. You would be unable to perceive the spectrum of light including orange, yellow, green, blue, and violet.

Seeing only two colors of light: the image at left of a rubber duck has the complete set of red, green, and blue colors, while the one below has only red and blue colors.

Right: Image of rubber duck with only red light displayed.
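Displaying only some colors of light amounts to setting the other intensities to zero in every pixel. A minimal sketch, in which the function name and the sample pixel values are invented for illustration:

```python
def keep_channels(image, keep):
    """Return a copy of the image showing only the named channels, e.g. 'R' or 'RB'."""
    mask = tuple(1 if name in keep.upper() else 0 for name in "RGB")
    return [
        [tuple(value * m for value, m in zip(pixel, mask)) for pixel in row]
        for row in image
    ]


# Hypothetical two-pixel image: a yellow duck pixel next to a pale blue background pixel.
image = [[(255, 220, 0), (120, 160, 230)]]

print(keep_channels(image, "RB"))  # red and blue only
print(keep_channels(image, "R"))   # red only
```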

IX. Benefits of Digital Images

There are many ways to use digital images to measure variables of objects in an image and share data with a larger community.

In order to do so efficiently and accurately, you need to be familiar with the fundamental ways data may be accessed spatially and spectrally.

When there are multiple images of the scene, changes in the spatial and spectral variables can also be measured.

Before we go on to the next chapter about how measurements are made using digital images, let’s briefly look at two more color questions….

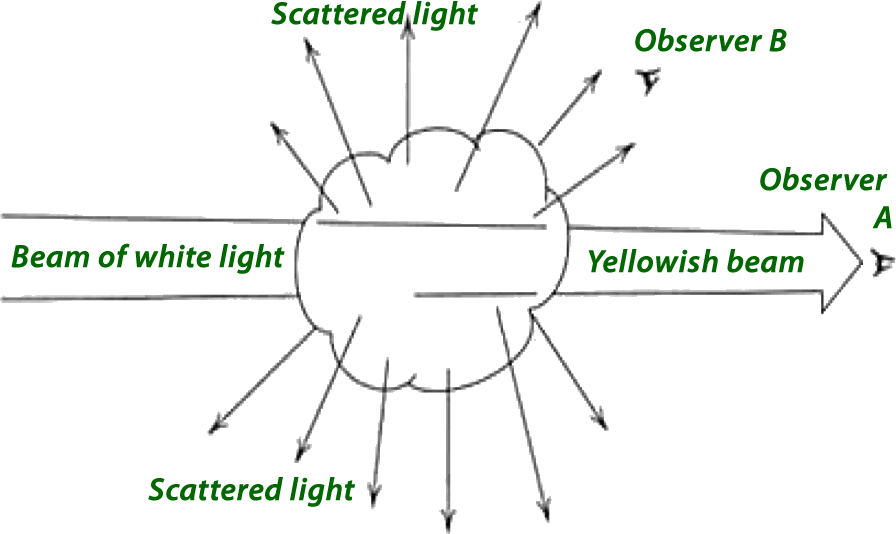

Why Is the Sky Blue?

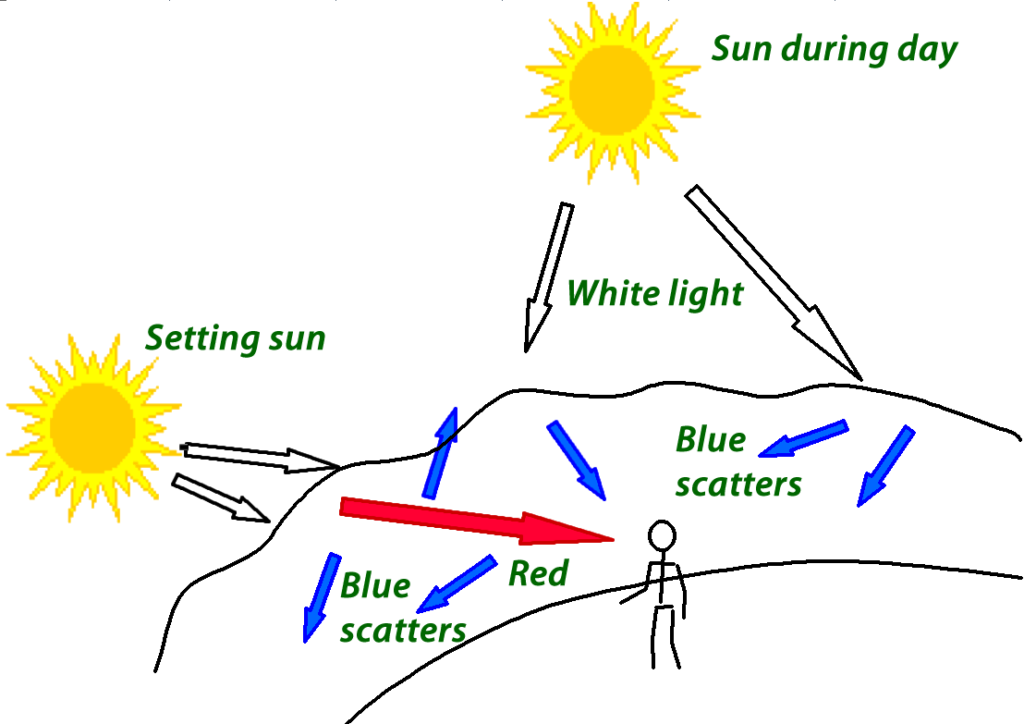

The Sun produces white light, a combination of all of the colors of the rainbow. When we view sunlight through a prism, we bend the light so that it is separated into all of the colors. When sunlight shines through our atmosphere the same process occurs; it is bent and scattered by particles. Our atmosphere, because it is largely made up of nitrogen and oxygen, is most efficient at scattering blue wavelengths of light. What effect does this scattering have on what we see in the atmosphere?

If you look back at what you discovered from experimenting with color, you saw that if we remove blue from our white light, the color that we are left seeing is yellow. So looking directly at the Sun, which we do not recommend, the Sun would theoretically have a yellowish hue. But the main reason the sky appears blue to us is not that the blue light is removed—it’s that the blue light is scattered by the predominant molecules in air: nitrogen and oxygen.

Why Are Sunsets Red?

We all know that the sky is not always blue. At sunset and sunrise, the sky includes many more colors from throughout the spectrum.

Other colors of light are scattered by our atmosphere, but not as efficiently as the blue. When the Sun is high in the sky, its light has a shorter distance to travel through the atmosphere and the blue light is scattered. When the Sun is low in the sky, the path is much longer, the atmosphere through which the sunlight has to travel is much thicker, and even more of the blue light is scattered. The remaining reds, oranges, and other longer wavelength colors are left to reflect off anything in the atmosphere, especially clouds. It is particular arrangements and mixtures of cloud types that make the most vibrant sunsets for any observer who is lucky enough to see, or takes the time to enjoy, a memorable sunset.
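How much more efficiently blue is scattered can be estimated. The text gives no formula, so the sketch below assumes Rayleigh scattering, in which scattering by air molecules is proportional to 1/λ⁴. The wavelengths are approximate values chosen for illustration.

```python
def relative_scattering(wavelength_nm, reference_nm):
    """How strongly a wavelength is scattered compared with a reference wavelength.

    Assumes Rayleigh scattering (strength proportional to 1 / wavelength**4).
    """
    return (reference_nm / wavelength_nm) ** 4


# Assumed, approximate wavelengths: blue about 450 nm, red about 700 nm.
ratio = relative_scattering(450, reference_nm=700)
print(f"Blue light is scattered about {ratio:.1f} times more strongly than red.")
```

Under this assumption blue is scattered several times more strongly than red, so a long path through the atmosphere at sunset removes most of the blue and leaves the longer wavelengths.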