DEW Formative Evaluation of Adult Learning

By Jeffrey S. Beaudry, Ph.D.

Program Evaluator

University of Southern Maine

Lesley Kennedy

Lead Educator

Museum of Science-Boston

Mavourneen Thompson

Portland, Maine

I. Introduction

Program evaluation of the Measuring Vegetation Health project, later renamed Digital Earth Watch (DEW), incorporated both formative and summative evaluation components. The overall program evaluation is discussed in the chapter “DEW Program Evaluation”; this chapter treats formative evaluation as a separate topic. Summative evaluation aimed to answer overall questions about the quality of DEW products and the effects of working groups and workshops, and to inform decisions about program continuation. Summative evaluation occurred at the end of each year and was especially important at the end of the entire five-year grant cycle. Summative evaluation questions focused on the overall success of the program across all of the DEW partners. Formative evaluation, by contrast, aimed at more immediate improvement of processes and products during development: interview, observation and survey data were collected and used for immediate feedback and improvement of DEW program components.

Formative evaluation focuses on specific processes, activities and/or products with the goal of improvement. “Formative evaluation provides evidence about how to improve a program as it is developing” (Beaudry and Miller, in press, p. 199). In this chapter we describe how formative evaluation was used to improve the working groups overall, with the specific goals of improving individual learning stations, the image analysis software, and the DEW website. We provide details about the use of reflective writing and feedback in the specific context of informal science education working groups whose target audiences were science teachers and informal science educators.

Our overarching question was: “How do we create learning experiences that help educators grasp these ideas through inquiry, and with respect to what they are required to teach?” (Kennedy and Beaudry, 2006).

II. DEW Working Group Design: Program, Partners and Professional Development Context

The primary evaluation goal for the second year of the DEW grant was to provide formative evaluation for the complete cycle of five working groups. Our definition of a working group is as follows:

“A deliberate professional development format based on models of prototyping/field-testing and designed to explore learning experiences with educators before developing final products and workshops.

Intended to collect data on how the tools work, how adult learners make meaning of them and how teachers think about these tools in relation to their own classrooms” (Kennedy and Beaudry, 2006).

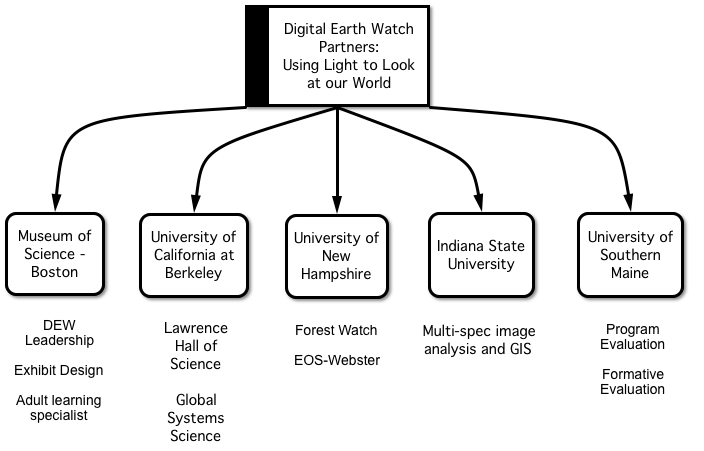

Figure 1: DEW Partners for Working Groups

From April 2005 to June 2006, each of the DEW Partners hosted and participated in a working group. The resulting series of working groups shared a common purpose: to field test, use, and improve DEW products and processes with formal and informal science educators.

While the first year of the DEW activities could be described as one of activation and engagement of DEW partners in the DEW concepts, the second year has been one of consolidation of DEW concepts. In the third and fourth years DEW goals and activities will concentrate on further refinement of tools and resources and dissemination of materials, tools, and resources into formal classroom and informal science settings.

During the cycle, a total of 75 participants attended these working groups: approximately sixty middle and high school teachers and fifteen informal educators from museums and informal science organizations. In each working group, invited participants and DEW experts and staff engaged as instructors, facilitators and collaborators in activities, both as adult learners and as professional educators, alternately presenting, listening, reflecting, writing, designing, and questioning. At the end of the cycle, Lesley Kennedy, the DEW educator most responsible for the design of the working groups, captured the collaborative exchange with the question, “Who is teaching whom?” The working groups were intended to engage invited participants with DEW staff in field testing tools and resources, and to maximize opportunities for feedback. Participants’ feedback was incorporated into improvements in DEW tools such as the image analysis software, processes such as the learning stations, and products such as participants’ personal investigations.

Working group participants engaged as learners.

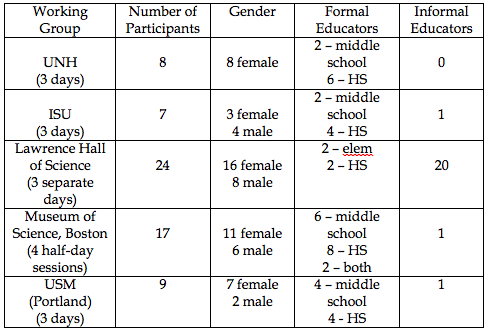

By June 2006, all DEW partners had presented DEW tools and processes in a working group or workshop environment. Three of the five working groups (UNH, ISU, and USM) were designed to be small, with at most 10 participants, and three days in duration (see Table 1). The working group at the Museum of Science consisted of middle and high school teachers who met for a half-day over the span of four months. All of the working groups focused on opportunities for DEW partners to maximize feedback from formal and informal educators about DEW tools and processes. The working group participants at LHS were predominantly informal educators.

Many of these participants were available for only one day but still wanted to take part. The first day of the LHS working group had the largest attendance, 27 attendees, but the group was down to eight by the third day. A total of 65 participants attended the working groups, with a blend of middle and high school, and formal and informal, educators in the audience.

Table 1: DEW Working Groups – Summary of Participants

As planned, roughly equal numbers of middle and high school teachers attended the working groups; approximately 75% of the participants were female. The UNH working group was filled with teachers already familiar with the Forest Watch program (http://www.forestwatch.sr.unh.edu/). The ISU working group included teachers with a wide range of background in GIS and remote sensing, including one teacher very familiar with applications of MultiSpec image processing.

At Lawrence Hall of Science (LHS), the largest contingent of informal educators attended the working group. Many of the informal educators had advanced science degrees and worked in a variety of informal science settings, including museums and science centers. A general audience of middle and high school science teachers attended the working group at the Museum of Science, Boston. A mix of middle and high school teachers, joined by one informal educator, attended the University of Southern Maine working group in Portland, ME. The heterogeneity of the working groups was intended to balance the review of the DEW tools and resources.

The resources and materials used in the working groups consist of image processing software, investigations, and hands-on materials developed by DEW Partners. Each partner has an established record of science education programming, and the synthesis of these efforts has yielded a collection of image processing software, investigations, and hands-on materials that now resides on the DEW web site (http://DEW.sr.unh.edu). The site was launched and populated with the full set of materials and resources in November and December 2005. The first version of the web site was used to support the LHS and MOS working groups, and a second-generation web design was implemented for the working group at USM in June 2006. Each DEW Partner has a link on the DEW web site: UNH has both Forest Watch and EOS-Webster linked, Indiana State University has the GIS link, and Global Systems Science is linked as well.

III. Informal Science Education, Adult Learners, and Inquiry

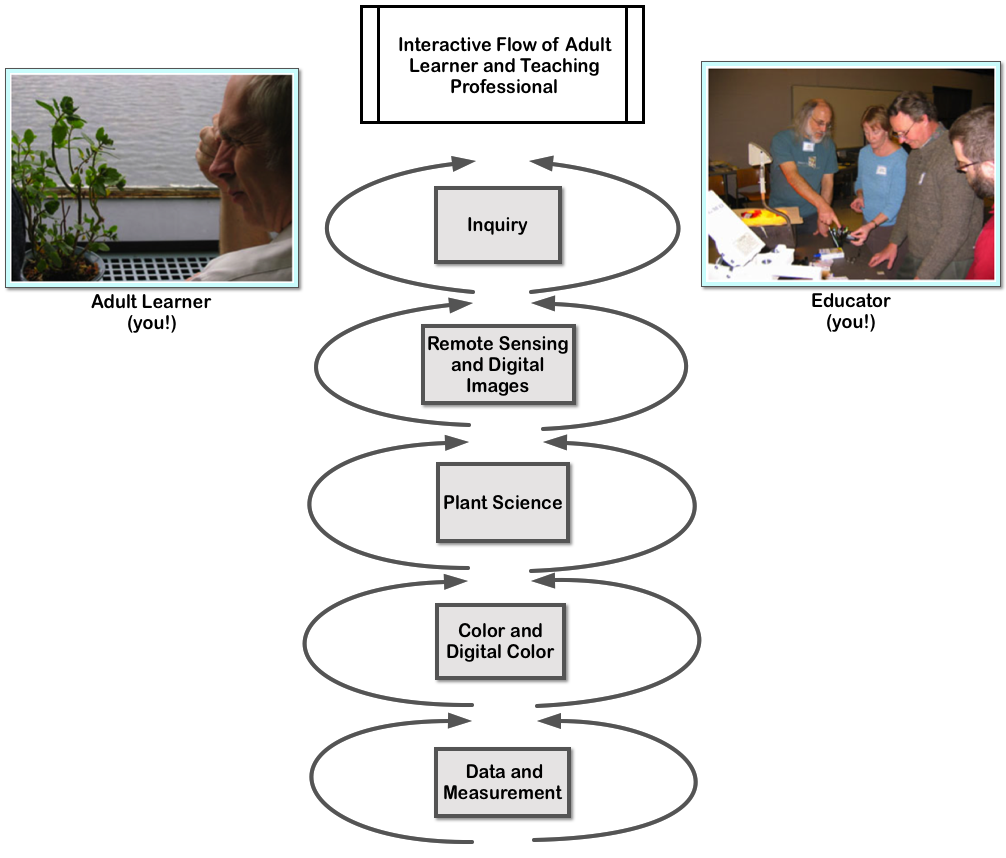

This section reviews our design of the working groups for adults, with an emphasis on the value of formative evaluation. In particular, we designed and implemented one- to three-day working groups for teachers and informal science educators. We sought to build connections and develop a deeper understanding of the big ideas associated with the DEW grant: data and measurement, light and digital color, plant science and vegetation health, remote sensing and digital imagery analysis, and inquiry (environmental monitoring for investigations). See Figure 2.

Figure 2: The interactive flow of adult learner and teaching professional

The strategic balance of two perspectives, that of the adult learner and that of the practicing science teacher, provided a dynamic structure for engaging both presenters and participants. Over the three-day working group, multiple opportunities and specific times were designated for each of these strategies. The basic structure for the first day of the working groups followed this two-part strategy very closely. After the introduction to the working group, participants rotated through a series of three learning stations.

After each station, participants returned to their computers to provide feedback through the web-based survey. Another brief lecture and demonstration took place in the morning, and over half of the afternoon was devoted to making classroom connections. During the remaining time, evaluators led working group presenters and teachers in a guided, reflective discussion of the participants’ experiences at the learning stations. The activities on the second and third days followed the same basic pattern, with some modifications and adjustments.

The literature on informal science education is challenging, due in part to the unique contexts, conditions and outcomes of formal (school- and university-based) and informal (out-of-school) science education, and to the commonalities between the two approaches. The DEW grant reflected both orientations through its selection of partners. Formal science learning was represented by UNH, ISU, and USM, and informal science learning was represented by the Museum of Science, Boston, UC-Berkeley’s Lawrence Hall of Science, and the Blue Hill Observatory. While the DEW partners represent both approaches, the approaches often overlap and operate in complementary ways.

The overall goal of the DEW grant was to develop tools and resources for formal (classroom) and informal science education. Relevant themes in the informal and formal science education literature point to several key concepts:

1. New paradigm of integrating teaching, learning, and assessment

2. Big ideas used to organize curriculum, content and inquiry

3. Acknowledgment of shared expertise of classroom teachers and DEW scientists

4. Use of formative evaluation to improve working groups

5. Use of integrative, reflective writing to reveal participants’ learning progressions

6. Authentic relationships between formal and informal learning

7. Emphasis on disciplined inquiry

Science is a field that combines content knowledge with inquiry and research to explore and understand questions. According to Shepard (2000), the current paradigm of curriculum theory, learning, and measurement emphasizes the need to integrate challenging content learning with reasoning strategies such as problem solving and inquiry. Science teachers, as learners in the working groups, needed to be socialized into the discourse and practices of experts in the subject matter disciplines (Engle and Conant, 2002). Reciprocally, university experts needed to understand the constraints facing classroom teachers: high-stakes testing, alignment with science standards, and inconsistent access to technological tools such as computers and hand-held measurement devices, as well as to regular outdoor learning.

Developing authentic relationships between in-school (formal) and out-of-school (informal) learning is another valued component of curriculum. The DEW grant partners have authentic programs, such as Forest Watch, that link K-12 schools with UNH, as well as ongoing museum-school partnerships at the Museum of Science, Boston and at Lawrence Hall of Science, UC-Berkeley.

In 2004 a paper commissioned by the National Research Council’s Committee on Test Design for K-12 Science Achievement made explicit connections between teaching, learning and assessment. The purpose of the study was “to suggest ways of using research on children’s learning to improve assessments, including both large-scale national and state level and classroom assessments” (Smith, Wiser, Anderson, Krajcik, and Coppola, 2004, p. 4). Its central recommendation was that teaching should focus on big ideas, both to bring coherence to curriculum and to help “teachers align curriculum task(s) with learning performances” (Smith et al., 2004, p. 5). In addition, big ideas serve as organizers for assessment. The big ideas in the DEW grant are vegetation and environmental health, remote sensing, light, and digital imagery analysis. Big ideas “are a source of coherence among the various concepts, models, theories, principles and explanatory schemes to different classes of phenomenon within a discipline. They also provide insight into the development of the field and are links between different disciplines” (Smith et al., 2004, p. 5). The big DEW ideas were built into the design and kept constant through the series of five working groups. This did create tensions for teachers in Massachusetts, Maine, California, Indiana, and New Hampshire, who are responsible for specific state standards.

Professional development and teacher learning were essential aspects of the DEW grant activities, although there were no specific student outcomes. Borko (2004) adopted a “situative” perspective to characterize the field of research on professional development. To understand the link between participation in professional development and its impact on student learning, Borko suggests that teacher learning combines individual understanding and growth with socialization and enculturation into the practices of experts in the field. A clear area of expertise and practice for the working group presenters was the university research program Forest Watch, a school-university partnership focusing on the study of white pine that fostered the growth of new practices in classroom teaching and learning. The DEW working groups were designed to extend the Forest Watch model of school-university research partnerships. The working groups allowed DEW facilitators to work with middle school and high school teachers to pilot test and use new DEW tools and resources, such as image analysis software. The DEW grant used a professional development model for the working groups in which both teachers and facilitators/presenters were seen as experts and as learners, a shared expertise approach (Engle and Conant, 2002). In particular, participants were considered experts when it came to making classroom connections about the feasibility of using DEW tools and resources.

The purpose of the working groups was as much for DEW presenters to learn what teachers knew about the key DEW big ideas as it was to foster teachers’ disciplinary engagement. Disciplinary engagement means “that there is some contact between what the students are doing and the issues and practices of a discipline” (Engle and Conant, 2002, pp. 402-403). DEW presenters and participants engaged in dialogue on critical issues such as ozone levels, invasive species, and using plants as indicators of environmental health, and they used new tools for inquiry: digital images, image analysis and remote sensing. Some of the inquiry activities were structured in advance, but they were flexible enough to lead participants to their own questions for inquiry.

Another feature of effective science teaching and learning essential for the DEW working groups is the concept of productive disciplinary engagement (Engle and Conant, 2002).

Focusing on productive disciplinary engagement allows one to trace the moment-to-moment development of new ideas and disciplinary understandings as they unfold in real-life settings. It provides a complementary perspective to views of learning that rely on static comparisons of student understanding with pre- and post-measures. By incorporating content and interaction, this perspective also highlights the ways in which learning is a simultaneously cognitive and social process. (p. 333)

The working groups’ design provided for “productive disciplinary engagement” by placing as many as four or five DEW scientists and educators with seven or eight participants. Furthermore, productive disciplinary engagement took place as participants formulated their own questions and designed their own investigations with the support of DEW scientists. A key question for the grant remained: did the self-design of inquiry and investigations promote the transfer of the big ideas to classroom practice or to other informal science settings?

The online reflective writing assessment of the participants provided important feedback to presenters and evidence of the development of teachers’ understanding of big ideas. A key feature associated with big ideas of science learning is the notion of learning progressions. “Big ideas can be understood in progressively more sophisticated ways as students (learners) gain in cognitive abilities and experiences with phenomena and representations. They [big ideas] underlie the acquisition and development of concepts to a discipline and lay the foundation for learning” (Smith et al., 2004, p. 5). In the first stage of the working group, planned learning centers were structured with DEW tools and resources to represent big ideas. After rotating through the learning stations, participants learned how to use image analysis software and heard experts describe applications of plant health and environmental health studies. At each stage, participants were asked to write in the online assessment and participate in discussions about their understanding of big ideas.

The design of the DEW working groups balanced two roles: the adult learner, who acquires and consolidates new knowledge, and the practitioner, who needs to make connections with his or her professional education audience and setting.

IV. Working Group Formative Evaluation Design and Results

The DEW evaluation concentrated on providing formative feedback to the DEW partners who designed and presented the working groups and workshops. Multiple evaluation methods were used, including participant-observation at the working groups, an online assessment of working group participants, and interviews with the DEW partners.

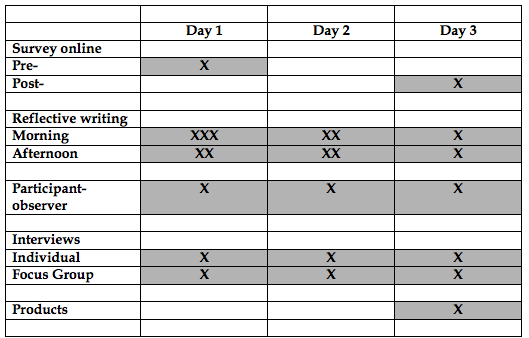

Table 2: Methods Used for Working Groups’ Formative Evaluation

1. Online Survey with Embedded Reflective Writing

The second-year evaluation report is based primarily on qualitative, descriptive methods, supplemented by some descriptive quantitative data. Data collection methods used to evaluate the working groups included the online participant survey, focus groups, and participant-observation. In addition, the working group agendas were content-analyzed as evidence of how time was structured each day.

The questions for the online participant survey were developed with content experts and informal educators from the DEW grant and two evaluators from PolicyOne Research. The online survey questions were scheduled for completion throughout the working group at times that matched specific activities and experiences. The agendas for the working groups included specific times for the online survey, as well as for focus group discussion with participants.

There were a total of 52 questions, spread evenly over a three-day working group schedule. The online survey required all participants to have access to computers during the working group sessions. All participants who attended the working groups logged on to the survey, and over 75 percent of the participants completed all of the questions. One reason the completion rate was not higher was that participants in the working group at Lawrence Hall of Science could choose whether to attend one day or all three days. Participants at all of the other working groups were expected to attend the entire working group and to complete all of the online survey questions.

The online survey combined closed, fixed-response questions with open-ended, reflective questions. To provide a pre- and post-measure of impact, working group participants responded to the same set of closed questions at the beginning and end of the working group. Open-ended, reflective questions about the content and big ideas were embedded throughout the working group. The reflective writing prompts were organized around the following core questions:

* “What did you learn?”

* “What questions do you have?”

* “Describe two things that helped you make a classroom connection.”

* “Tell us one thing about yourself as a learner that you want us to know.”

There were separate reflective writing events after each learning center session, approximately once every 1½ to 2 hours. Participants wrote about the big ideas from each learning center:

* Light and light sources

* Digital cameras

* Filtering light (dueling light beams)

* Flowers and filters

For example, the set of questions following the “Flowers and Filters” learning center read:

* “One thing you learned about your experience with the Flowers and Filters learning center.”

* “A second thing you learned about your experience with the Flowers and Filters learning center.”

* “One question I have or something I don’t understand about the Flowers and Filters learning center.”

The questions were designed to engage teachers in discussing complex concepts and to model the metacognitive processes that reflect constructivist learning principles. One of the design principles of the working groups was to engage learning through the identification and development of questions. The questions of value were provided by working group leaders and were based on individual needs and interests.

The concluding questions for each day were about the transfer of big ideas to the classroom and about the working group overall. Questions were written to prompt participants as follows:

* What is one way you could use the knowledge you have gained about color and light in your classroom teaching?

* What are two suggestions for improvement of the working group?

As these prompts show, participants were asked to engage in repeated, focused reflective writing throughout the day, which placed them in a constructivist, reflective role. One advantage of the online survey was that results were available immediately for feedback to presenters; it also made it possible to monitor participants’ completion. Embedding the survey throughout the working group agenda allowed participants to write out their reactions to learning experiences as they occurred. Participants’ names were removed from the data before the results were provided to presenters for their review.

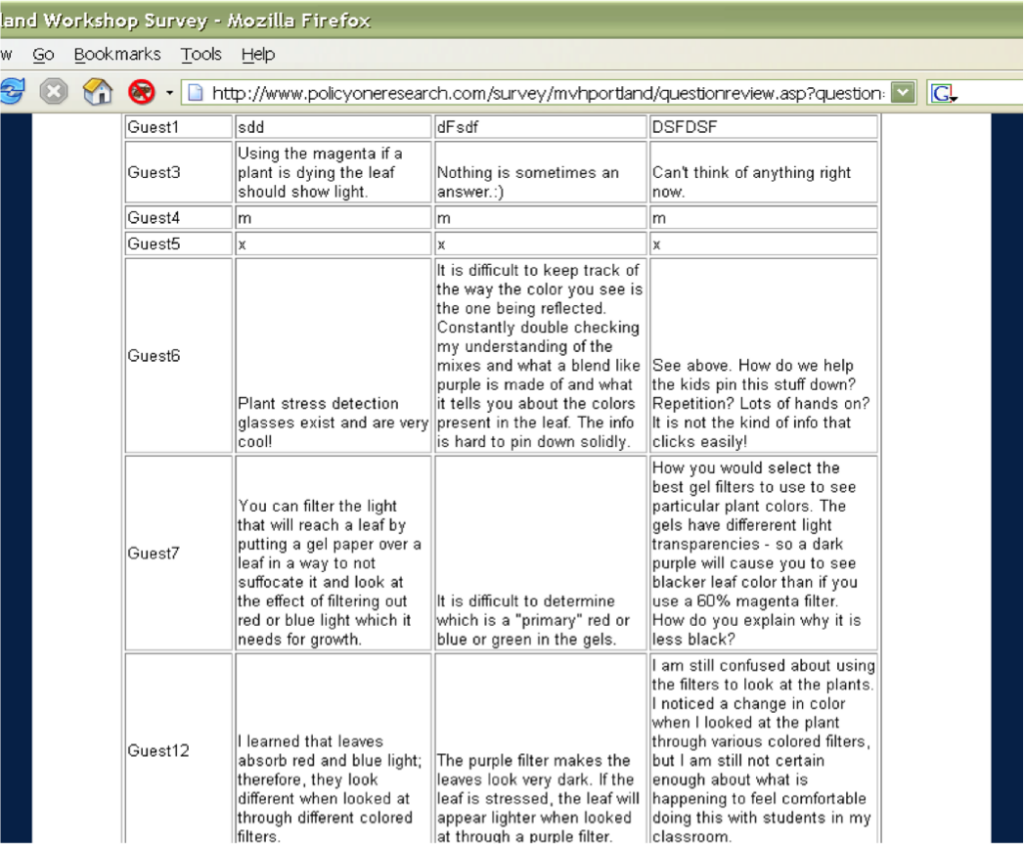

Sample of Reflective Writing

This example illustrates how the online, open-ended writings were used. The online survey results for open-ended questions were read and analyzed using existing question categories derived directly from instructional goals. For example, participants’ reflective writing from the learning station entitled “Using Light-emitting diodes (LEDs) and voltmeters to measure light energy” was initially coded with the words “use of LEDs,” “use of voltmeters,” and “measurement of light energy.” As the text was read, new coding categories were added to match the participants’ narratives, such as “this is never understood this concept”.
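The coding procedure described above, in which initial categories come from instructional goals and new categories are added as the text is read, can be illustrated with a short sketch. This is a hypothetical illustration, not the evaluators’ actual procedure or codebook: the regular-expression patterns, the function names `code_response` and `add_emergent_code`, and the emergent category label are all assumptions. Keyword matching of this kind could at most support, not replace, a human reader’s coding.

```python
import re

# Hypothetical initial codebook: category -> regular-expression patterns.
# The categories mirror the LED/voltmeter learning-station example; the
# patterns themselves are illustrative assumptions.
INITIAL_CODES = {
    "measurement of light energy": [r"\blight energy\b"],
    "use of LEDs": [r"\bleds?\b", r"\blight-emitting diodes?\b"],
    "use of voltmeters": [r"\bvoltmeters?\b"],
}


def code_response(text, codebook):
    """Return, in sorted order, every code whose patterns match the response."""
    return sorted(
        code
        for code, patterns in codebook.items()
        if any(re.search(p, text, flags=re.IGNORECASE) for p in patterns)
    )


def add_emergent_code(codebook, code, patterns):
    """Return a copy of the codebook with a category that emerged while reading."""
    updated = {name: list(pats) for name, pats in codebook.items()}
    updated.setdefault(code, []).extend(patterns)
    return updated


response = "I learned that an LED and a voltmeter can measure light energy."
print(code_response(response, INITIAL_CODES))
# prints ['measurement of light energy', 'use of LEDs', 'use of voltmeters']

# Hypothetical emergent category added after reading participants' narratives.
codes = add_emergent_code(INITIAL_CODES, "new conceptual understanding", [r"\bnever understood\b"])
print(code_response("I never understood this concept before today.", codes))
# prints ['new conceptual understanding']
```

Word-boundary patterns are used so that, for example, “called” is not miscoded as a mention of LEDs; the codebook is copied rather than modified so that the initial, goal-derived categories remain available for comparison.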

2. Online Survey Questions

The online assessment was embedded in the agenda of the working groups so that participants responded immediately to reflective writing prompts about learning activities and presentations. Working group evaluators processed this information and provided immediate feedback to presenters and organizers. For each working group, the online assessment consisted of questions about:

- participant background (fixed response)

- content preparation and confidence to teach DEW concepts (fixed response),

- teaching practices and computer technology capacity for teaching and learning (fixed response),

- reflective questions about DEW concepts and resources following specific working group activities (open-ended),

- suggestions for improvements of DEW concepts and resources (open-ended),

- effects of DEW activities on understanding of DEW concepts (open-ended).

Participants were asked to respond to questions approximately three to four times per day, at predetermined intervals, and were given ten minutes to complete each assigned online writing assessment. The online assessment also allowed participants to review and revise their responses during the working group: they could go back to questions previously answered, but they could not go forward until they had responded to all questions. The goal was full completion of the online survey, but some participants were allowed to submit their final responses at their convenience during the evening or between sessions.
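The navigation rule described above (participants could revisit earlier questions but could not advance until the current questions were answered) can be sketched as a small state machine. This is a hypothetical illustration: the survey platform actually used is not described here, and the class name `ReflectiveSurvey`, the grouping of questions into sections, the question ids, and the treatment of blank answers as unanswered are all assumptions.

```python
class ReflectiveSurvey:
    """Hypothetical sketch: going back is always allowed; going forward
    requires a non-blank answer to every question in the current section."""

    def __init__(self, sections):
        # sections: list of lists of question ids, one list per writing event
        self.sections = [list(section) for section in sections]
        self.answers = {}
        self.position = 0

    def answer(self, question_id, text):
        """Record or revise an answer to a question in the current section."""
        if question_id not in self.sections[self.position]:
            raise ValueError(f"{question_id!r} is not in the current section")
        self.answers[question_id] = text.strip()

    def section_complete(self, index):
        """True if every question in the section has a non-blank answer."""
        return all(self.answers.get(q) for q in self.sections[index])

    def go_back(self):
        """Return to the previous section so earlier answers can be revised."""
        self.position = max(0, self.position - 1)

    def go_forward(self):
        """Advance one section; return False if blocked or already at the end."""
        at_last = self.position == len(self.sections) - 1
        if at_last or not self.section_complete(self.position):
            return False
        self.position += 1
        return True


survey = ReflectiveSurvey([["learned_1", "question_1"], ["classroom_1"]])
survey.answer("learned_1", "Filters change which wavelengths reach the camera.")
print(survey.go_forward())  # False: question_1 is still unanswered
survey.answer("question_1", "How do I choose a filter?")
print(survey.go_forward())  # True
```

Revision is modeled as going back and answering again, which overwrites the stored answer; answers already given stay in place, so returning forward through a completed section is not blocked.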

Table 2: Comparison of Questions About Confidence to Teach Science Content: Pre- and Post-Working Group. (Based on a 5-point Likert scale, 1=Strongly Disagree to 5=Strongly Agree)
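The comparison described in the caption above amounts to computing, for each item, the mean rating before and after the working group and the change between them. The sketch below illustrates that arithmetic on the 5-point scale. The function name `likert_summary`, the item name, and the ratings in the usage example are hypothetical and are not the working groups’ data. Because not every participant completed every question, the sketch compares group means rather than matched individual scores.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5  # 1 = Strongly Disagree, 5 = Strongly Agree


def likert_summary(pre, post):
    """Return {item: (pre_mean, post_mean, change)} for items rated both times.

    pre and post map each survey item to a list of 1-5 ratings. Means are
    computed per administration, so the two lists may differ in length.
    """
    summary = {}
    for item in sorted(set(pre) & set(post)):
        for rating in pre[item] + post[item]:
            if not SCALE_MIN <= rating <= SCALE_MAX:
                raise ValueError(f"{item!r}: rating {rating} is off the 1-5 scale")
        pre_mean, post_mean = mean(pre[item]), mean(post[item])
        summary[item] = (round(pre_mean, 2), round(post_mean, 2), round(post_mean - pre_mean, 2))
    return summary


# Invented ratings, for illustration only.
pre = {"confidence teaching light and color": [2, 3, 2, 3]}
post = {"confidence teaching light and color": [4, 5, 4, 4]}
print(likert_summary(pre, post))
# prints {'confidence teaching light and color': (2.5, 4.25, 1.75)}
```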

3. Participant-observation and Focus Group Interviews

As the primary DEW evaluator, Jeff Beaudry attended all of the working groups as a participant-observer, participating directly in learning stations and inquiry as a citizen scientist and conducting informal individual interviews as well as formal focus group interviews. He began the series of working groups as a novice science learner with a limited understanding of DEW concepts such as remote sensing data, image analysis software, and the use of light and color to understand plant and environmental health. He did, however, have an extensive background in scientific photography, including an Associate of Arts in Bio-medical Photography, and has taught courses on cameras, color filters and light. In addition to the working groups, he attended several Forest Watch workshops at UNH. His role as lead evaluator included managing and providing technical assistance for the online survey, facilitating focus group discussions, conducting informal interviews with participants, and conducting debriefing interviews with DEW presenters. Field notes were written to summarize focus groups, interviews and debriefings. He also participated directly in a number of learning stations and activities at the working groups at Indiana State University, Lawrence Hall of Science, the Museum of Science and the University of Southern Maine. In each case, he sat with participants throughout the working group schedule to develop a working trust with them, to participate as an adult learner, and to observe participants’ interactions with the DEW tools and resources as closely as possible. Other evaluators were included in several of the working groups: two PolicyOne evaluators attended the UNH working group, an intern from the Museum of Science, Boston attended the ISU working group, and a research assistant attended the working groups at the Museum of Science, Boston and the University of Southern Maine. All of the evaluators wrote field notes and participated in extensive debriefing with the lead evaluator after the working groups.

4. Participants’ Content Learning

Multiple methods were used to examine participants’ understanding of key DEW concepts and the challenges of using DEW concepts in their formal and informal science education work. All participants responded to a set of questions at the beginning, during, and at the conclusion of the working group that focused on its content learning. An initial battery of questions asked about teachers’ understanding of key concepts of the DEW grant, such as light and color, reflectance and absorbance of light, use of digital and satellite imagery, image analysis, and inquiry.

Figure 4: Using Color Filters to Understand and Measure Plant Health

A consistent pattern for middle and high school educators was that key DEW concepts were unfamiliar topics. In pre-working group questions, participants indicated that they had little background in key DEW topics such as using light to look at plants, remote sensing, and spectrographic measurement. At the conclusion of each working group, a parallel series of questions asked whether teachers had improved their understanding of DEW science content. Participants rated their learning of key DEW topics at the working group very highly. These responses indicate that teachers learned more about the topics presented, particularly using light to look at plants, light and color, and cameras as data collection tools. Mean scores for the UNH and LHS respondents were 4.11 or higher, indicating strong agreement that their understanding of the content had improved. While the working groups were instrumental in increasing confidence with this content, comments in the open-response questions identified the following content issues:

- Light is a specific topic usually taught in physics

- Color is a specific topic usually taught in physics; the use of spectroscopy to analyze matter may be done in chemistry

- The use of color filters to study the health of plants and trees is not found in current curricula

- Study of trees and plants may be found in programs like Forest Watch and Project Learning Tree

- Teachers like the basic concept of Forest Watch: specific trees and plants can be used as indicator species. Forest Watch uses white pine and has now added maple trees.

- Photosynthesis is a topic found in middle and high school curricula, but it is taught as a chemical reaction, not integrated with the concept of plant and tree health

V. Recommendations for Future Working Groups

* Clarify the purpose of the working groups (understanding plants as indicators of environmental conditions); make more explicit connections with the environmental questions that vegetation health raises about local, regional and global environments

* Balance the need for participants to explore ideas and generate their own questions about plants as indicators of environmental conditions with the structure necessary for learners to organize complex ideas in the DEW grant

* Focus and revise learning centers – remove some of the materials and equipment, add directions and labels; break down learning centers into smaller, more focused units, with more emphasis on digital cameras as remote sensing, data collection devices

* Model ways for teachers to use digital cameras – have sets of digital images ready for analysis, require teachers to bring digital images

* Review materials to begin the process of designing accommodations for learners with special needs

* Continue to develop model Investigations of a wide variety – teachers will use the satellite imagery, especially if it includes their own geographical location; each working group location should have pre-made sets of remote sensing data (satellite imagery, photographs, etc); encourage students and teachers to contribute their local Investigations to the DEW resource collection

* Develop long-term plans for communications with and support of working groups

* Develop strategies for reviewing and evaluating learning activities against standards of quality and classroom assessment

* Incorporate concept mapping software directly into web site, working groups and learning centers; content for light sources, filters, and digital cameras should have concept map templates

* Catalog learning activities and make the connections with state standards in science, geography, and mathematics for middle and high school students

* Make explicit connections with the Global System Science materials

* Explore strategies to create ongoing learning communities with participating teachers and DEW faculty; incorporate the work of teachers involved in the first round of working groups into the design of subsequent working groups

References

Beaudry, J. (2005). Summary of the UNH and ISU Working Groups – UNH, April 26-28, 2005, ISU, June 8-10, 2005.

Borko, H. (2004). Professional development and teacher learning: Mapping the terrain. Educational Researcher, 33(8), pp. 3-15.

Engle, R. and Conant, F. (2002). Guiding principles for fostering productive disciplinary engagement: Explaining an emergent argument in a community of learners classroom. Cognition and Instruction, 20(4), pp. 399-483.

Kennedy, L. and Beaudry, J. (April, 2006). Using formative assessment tools to understand teacher learning. Paper presented to the Annual Meeting of the Association of Science-Technology Centers.

Rogan, B., Gould, A., and Rock, B. (2006). The Interim Report April 1 to July 31, 2006.

Shepard, L. (2000). The role of assessment in a learning culture. Educational Researcher, 29(7), pp. 4-14.

Smith, C., Wiser, M., Anderson, C., Krajcik, J. and Coppola, B. (2004). Implications of research on children’s learning for assessment: Matter and atomic molecular theory. Paper commissioned by the Committee on Test Design for K-12 Science Achievement, Center for Education, National Research Council.